Cluster

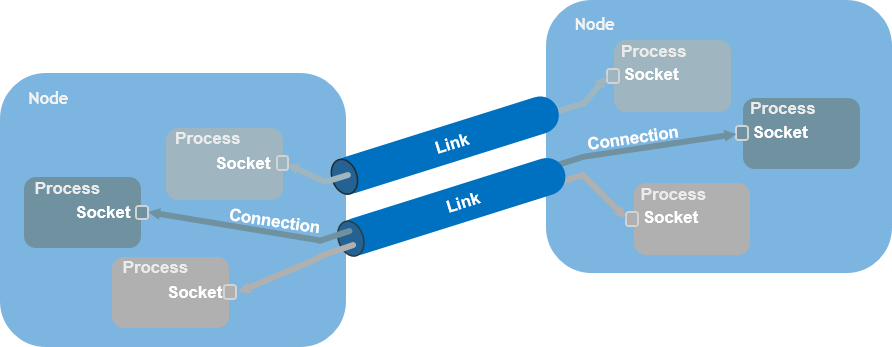

Link

A TIPC cluster consists of nodes interconnected with links. A node can be a physical processor, a virtual machine, or a network namespace, e.g., in the form of a Docker container. A link constitutes a reliable packet transport service, sometimes referred to as an "L2.5" data link layer.

- It guarantees delivery and sequentiality for all packets.

- It acts as a trunk for inter-node connections, and keeps track of those.

- When all contact with the peer node is lost, all sockets with connections to that peer are notified so they can break the connections.

- Each endpoint keeps track of the peer node’s address bindings in the local replica of the binding table.

- When contact with the peer node is lost, all bindings from that peer are purged and service tracking events are issued to all matching subscribers.

- When there is no regular data packet traffic, each link is actively supervised by probing/heartbeats.

- Failure detection tolerance is configurable from 50 ms to 30 seconds. The default setting is 1.5 seconds.

- For performance and redundancy reasons, it is possible to establish two links per node pair, on separate network interfaces.

- A link pair can be configured for load sharing or active-standby.

- If a link fails, there will be a disturbance-free failover to the remaining link, if any.
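
The supervision and failover behavior listed above can be illustrated with a small model. This is only a sketch under stated assumptions: the `Link` class, `supervise`, and `pick_link` are hypothetical names, not TIPC data structures or APIs, and the round-robin choice merely stands in for load sharing. Only the tolerance limits (50 ms to 30 s, default 1.5 s) come from the text.

```python
from dataclasses import dataclass

# Limits taken from the list above: 50 ms to 30 s, default 1.5 s.
MIN_TOLERANCE_MS = 50
MAX_TOLERANCE_MS = 30_000
DEFAULT_TOLERANCE_MS = 1_500


@dataclass
class Link:
    """Hypothetical model of one link endpoint (not a TIPC structure)."""
    name: str
    tolerance_ms: int = DEFAULT_TOLERANCE_MS
    last_heard_ms: int = 0  # time at which the peer was last heard from
    up: bool = True

    def __post_init__(self):
        if not MIN_TOLERANCE_MS <= self.tolerance_ms <= MAX_TOLERANCE_MS:
            raise ValueError("tolerance must be between 50 and 30000 ms")

    def supervise(self, now_ms: int) -> bool:
        """Mark the link down once the peer has been silent longer than the tolerance."""
        if now_ms - self.last_heard_ms > self.tolerance_ms:
            self.up = False
        return self.up


def pick_link(links, seq, load_sharing):
    """Pick a working link for packet number `seq`; None means all contact is lost."""
    working = [link for link in links if link.up]
    if not working:
        return None
    # Assumed policy: load sharing alternates over the working links,
    # active-standby always uses the first working link.
    return working[seq % len(working)] if load_sharing else working[0]
```

With two links to the same peer, a link that has been silent for longer than its tolerance is marked down, and `pick_link` then returns the remaining link. When both are down it returns `None`, which corresponds to the point where sockets are notified and bindings are purged.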

Cluster Membership

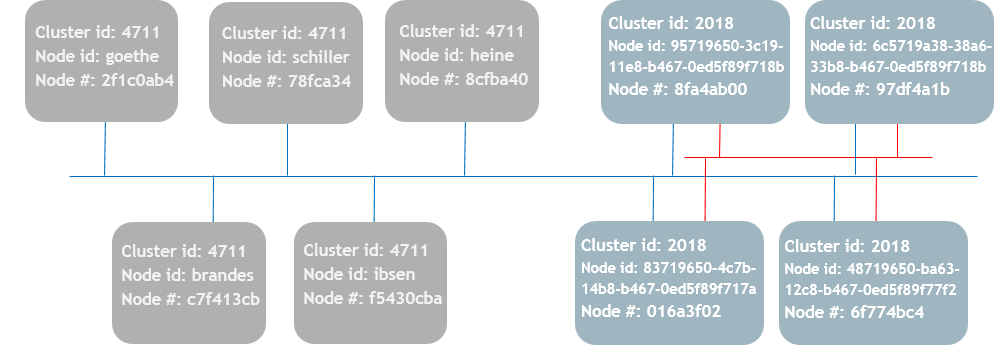

A cluster has a 32-bit cluster identity, which determines which nodes belong to the cluster.

- The cluster identity can be assigned by the user if anything different from the default value is needed, e.g., if there is more than one cluster on the same subnet.

- All nodes using the same cluster identity will establish mutual links.

- Neighbor discovery is performed by UDP multicast or L2 broadcast, when available.

- If broadcast/multicast support is missing in the infrastructure, discovery can be performed by explicitly configured IP addresses.

Each node has a cluster-unique node identity.
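
The rule that all nodes sharing a cluster identity establish mutual links can be sketched as follows. The function name `mutual_links`, the node names, and the identity values are illustrative assumptions. The sketch models only the outcome of discovery, not the discovery protocol itself.

```python
from itertools import combinations


def mutual_links(nodes):
    """Return the set of node pairs that would be linked.

    `nodes` maps a node name to its cluster identity. Nodes are linked
    pairwise if and only if they share the same cluster identity.
    """
    by_cluster = {}
    for name, cluster_id in nodes.items():
        by_cluster.setdefault(cluster_id, []).append(name)

    links = set()
    for members in by_cluster.values():
        # Full mesh inside each cluster; no links across clusters.
        links.update(combinations(sorted(members), 2))
    return links


# Example values only: two clusters sharing one subnet.
subnet = {"a": 4711, "b": 4711, "c": 4711, "d": 1234}
print(sorted(mutual_links(subnet)))
```

Node `d` uses a different cluster identity, so it gets no links to `a`, `b`, or `c` even though all four nodes are on the same subnet.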

- In versions before Linux 4.17, this is a 32-bit structured address which must be set by the user.

- In later versions, it is a 128-bit field with no internal structure or restrictions.

- This identity is by default set by the system, based on a MAC or IP address.

- It can also be set by the user, e.g., based on a host name or a UUID.

- The identity is internally hashed into a guaranteed unique 32-bit node address, which is then used by the protocol.

- The two identity formats are compatible, and can be used in the same cluster.
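
To make the mapping from a 128-bit identity to a unique 32-bit address concrete, here is a minimal sketch. It is not the hash the kernel uses: the XOR fold and the "try the next address" collision handling are stand-ins chosen for illustration, and `fold_to_32` and `assign_address` are hypothetical names.

```python
def fold_to_32(identity: bytes) -> int:
    """Fold a 16-byte identity into 32 bits by XOR-ing its four 32-bit words."""
    if len(identity) != 16:
        raise ValueError("identity must be exactly 16 bytes (128 bits)")
    addr = 0
    for i in range(0, 16, 4):
        addr ^= int.from_bytes(identity[i:i + 4], "big")
    return addr


def assign_address(identity: bytes, in_use: dict) -> int:
    """Return a 32-bit address for `identity` that is unique within `in_use`.

    `in_use` maps already assigned addresses to their identities. On a
    collision with a different identity, the next address is tried
    (an assumed strategy, used here only to show the uniqueness step).
    """
    addr = fold_to_32(identity)
    while addr in in_use and in_use[addr] != identity:
        addr = (addr + 1) & 0xFFFFFFFF
    in_use[addr] = identity
    return addr
```

A hash alone cannot guarantee uniqueness when 128 bits are reduced to 32, so some form of collision detection has to follow the hashing step. The `in_use` table plays that role in this sketch.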

Cluster Scalability

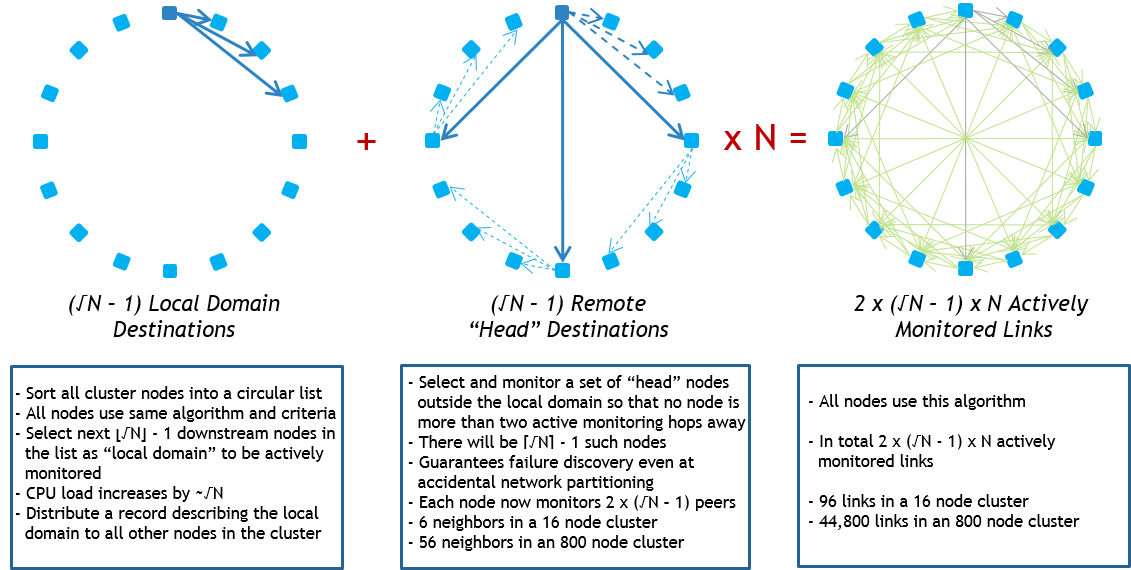

Since Linux 4.7, TIPC comes with a unique, patent-pending, auto-adaptive hierarchical neighbor monitoring algorithm. This makes it possible to establish full-mesh clusters of up to 1000 nodes with a failure discovery time of 1.5 seconds. In smaller clusters, this time can be made much shorter.
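
A quick calculation shows why neighbor monitoring becomes the limiting factor in a full mesh. The sketch below only does the arithmetic and says nothing about how the monitoring algorithm itself works. The helper names are hypothetical.

```python
def full_mesh_links(nodes: int) -> int:
    """Number of node pairs, and hence links, in a full mesh of `nodes` nodes."""
    if nodes < 0:
        raise ValueError("node count must be non-negative")
    return nodes * (nodes - 1) // 2


def peers_per_node(nodes: int) -> int:
    """Number of peers each node is directly linked to in a full mesh."""
    return max(nodes - 1, 0)


for n in (10, 100, 1000):
    print(n, full_mesh_links(n), peers_per_node(n))
```

At 1000 nodes this gives 499500 links and 999 peers per node, so probing every link at a rate that supports a 1.5 second detection time would generate considerable background traffic.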

More details about this algorithm can be found in the Overlapping Ring Monitoring slide set, which was also presented at the netdev 2.1 conference in Montreal, Canada, April 2017. A white paper can be downloaded from the TIPC project page.